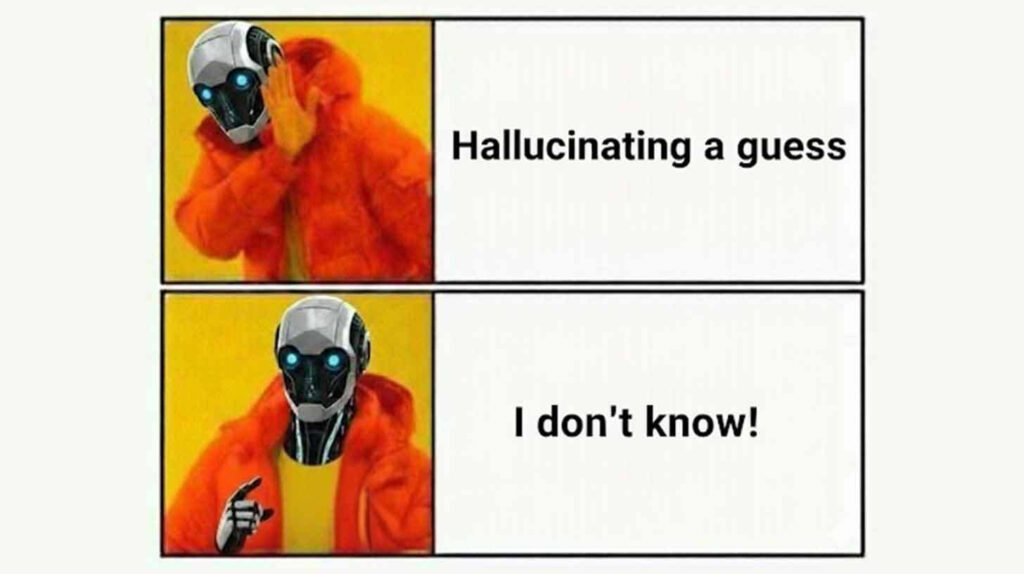

OpenAI has released new research claiming that AI models hallucinate mainly because standard training methods encourage them to guess confidently rather than admit when they don't know. According to the researchers, understanding this incentive points toward a way to tackle one of the biggest problems in AI system quality.

The team found that models invent facts because the evaluations used during training award full points for correct answers (including lucky guesses), while honest admissions such as "I don't know" score zero. This creates a perverse incentive: to maximize measured accuracy, models learn to always give an answer, even when they are uncertain.
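As a worked illustration, assume the binary grading described above (1 point for a correct answer, 0 for a wrong answer or for "I don't know") and let $p$ be the probability that the model's guess is correct. The expected scores of the two options are:

$$
\mathbb{E}[\text{score} \mid \text{answer}] = p \cdot 1 + (1 - p) \cdot 0 = p \;\ge\; 0 = \mathbb{E}[\text{score} \mid \text{abstain}]
$$

Answering therefore never scores worse than abstaining and scores strictly better whenever $p > 0$, so a model optimized for this metric learns to always guess.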

To test this hypothesis, the researchers asked AI models for very specific details, such as exact birth dates or dissertation titles. The models confidently produced a different wrong answer each time. As a potential fix, they proposed redesigning evaluation metrics so that "confident mistakes" are penalized more heavily than expressions of uncertainty.
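Below is a minimal Python sketch of the kind of scoring change described above. The specific penalty is an illustrative assumption, not a scheme taken from this article: for a chosen confidence threshold `t`, a wrong answer costs `t / (1 - t)` points, a correct answer earns 1, and "I don't know" earns 0. The function names `expected_score` and `best_action` are hypothetical. With this penalty, answering has a positive expected score only when the model's probability `p` of being correct exceeds `t`.

```python
from typing import Optional


def expected_score(p: float, t: Optional[float] = None) -> float:
    """Expected score of answering when the answer is correct with probability p.

    t is None  -> binary grading: correct = 1, wrong = 0.
    0 < t < 1  -> hypothetical penalized grading (an assumption for
                  illustration): correct = 1, wrong = -t / (1 - t).
    Abstaining ("I don't know") scores 0 under both schemes.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability in [0, 1]")
    if t is None:
        penalty = 0.0
    elif 0.0 < t < 1.0:
        penalty = t / (1.0 - t)
    else:
        raise ValueError("t must lie strictly between 0 and 1")
    return p * 1.0 - (1.0 - p) * penalty


def best_action(p: float, t: Optional[float] = None) -> str:
    """Answer only if that beats the guaranteed 0 points for abstaining."""
    return "answer" if expected_score(p, t) > 0.0 else "abstain"


if __name__ == "__main__":
    for p in (0.1, 0.5, 0.9):
        # Compare binary grading with penalized grading at threshold t = 0.75
        print(p, best_action(p), best_action(p, t=0.75))
```

Running it prints `answer` in the binary-grading column for all three confidence levels, while the penalized column abstains at 0.1 and 0.5 and answers only at 0.9: the sketch stops guessing once a wrong answer costs more than silence.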

The research suggests that hallucinations can be addressed more effectively during training. If labs start rewarding "honesty" instead of guessing, we could see AI models that recognize their own limits. That would mean lower accuracy on paper, but much higher reliability in real-world, critical tasks, which is what ultimately matters.

In brief: Tech World Highlights

- AnhPhu Nguyen and Caine Ardayfio launched Halo, a new pair of AI smart glasses with an always-listening option.

- Google announced a new Gemini-powered health assistant for Fitbit that will offer fitness, sleep, and health advice tailored to each user's data.

- Anthropic expanded access to its coding agent Claude Code to Enterprise and Team plans, adding new administrative controls for cost management, policy settings, and more.

- MIT's NANDA initiative reported that only 5% of enterprise AI implementations generate revenue, with knowledge gaps and poor integration slowing broader adoption.

- Sébastien Bubeck from OpenAI stated that GPT-5-pro can "prove new interesting mathematical theorems," after using the model to tackle open, complex problems.

AI Trending Tools: